I have recently spent some time thinking about how hardware can be architected and optimised to better support high-level dynamic languages such as Python and JavaScript. There appears to be a significant gap between the way processors and memory systems are built, which is to prioritise performance, and the characteristics of dynamic-language workloads, which prioritise ease of use. I argue that dynamic languages are setting the direction of travel in the way we use computers and this gap presents a huge opportunity for new hardware innovation.

Table of contents

- Dymamic languages

- Python and AI

- Python performance

- Hardware impacts on Python performance

- Hardware support for Python

- Summary

- Acknowledgements

- References

- Related links

Dynamic languages

According to Stack Overflow’s 2023 developer survey, JavaScript has now been the most commonly-used language for the last 11 years and Python has become the third most commonly-used language amongst all developers and the first amongst non-professional developers and those learning to code. Nestled amongst the most common languages are also TypeScript (a variant of JavaScript), C#, PHP, Lua and Ruby. Applications of these languages are wide ranging and varied, across all aspects of industry, science, business and government.

Dynamic languages have become popular because they are easy to use when compared with their statically-compiled counterparts. There are many aspects of the languages that contribute to this, such as dynamic typing, high-level features, no requirement for a compilation step to produce an executable format (leading to rapid iteration times), portability between platforms, powerful debugging due to runtime introspection, integration with editors and IDEs and natural support for metaprogramming. Ease of use improves programmer productivity and widens participation to non-professionals and those without expertise in low-level programming. These benefits are also a critical factor in the development of new application areas and technologies such as AI, where research and practice moves rapidly and participation across academia and industry is broad.

The cost of these benefits when compared with compiled languages is a runtime performance overhead due to the additional work the language implementation must do, for example to resolve names and types. The overhead depends on the workload, but is often in the realms of tens to hundreds of times slower. The evidence in the use of dynamic languages however is proof that this performance overhead is acceptable price to pay for their benefits. Having said this, there are ongoing substantial efforts to close the gap by optimising the language implementations and compilation strategies. As far as I can see, this effort has not extended to optimisation of the underlying hardware.

Python and AI

In this remainder of this note I will focus on Python and its application to AI, a domain that is significant enough to cause the development of new computer hardware and rapid adoption of new process, integration and packaging technologies.

Python has established itself as the main programming language in AI, and this is due to ease-of-use considerations. Programming in AI is typically done using frameworks, meaning a library that provides facilities to express a computation that is compiled and run within the program, rather than expressing the computation directly in the programming language. TensorFlow and PyTorch are two pre-eminent examples, but with PyTorch having taken the lead in becoming the most widely-used framework. TensorFlow established itself early with support from Google, but it has lost its dominance to PyTorch because PyTorch was easier to use and more flexible. It was thus more widely adopted and more quickly applied to new application areas.

PyTorch’s first design principle is usability over performance which clearly indicates ease of use is the driving force in AI model development and deployment. PyTorch’s third design principle is Python first meaning that working in Python natively (using the features of the language) provides the best experience and results for users, rather than deferring to optimised compiled-language libraries. PyTorch’s primacy and clear prioritisation of ease of use indicates the direction of travel: that Python will continue to become a first-class citizen in AI programming and so its performance will be increasingly under scrutiny.

Since around 2012, AI has undergone a renaissance by scaling the performance of deep neural networks with GPUs. Looking forward, there are many ways in which AI models are expected to develop, requiring programming techniques and hardware to develop to provide these capabilities too:

-

Model size is growing and will continue to grow. Although GPT-3 has 175 bn parameters, there are an estimated 86 bn neurons in the human brain and an order-of 100 tn parameters (albeit encoded using analog mechanisms). It is likely that sparsity will increasingly be required to train and access these models efficiently.

-

Conditional sparsity through conditionality in the structure of a network, eg routing of activity based on the data. In a dense network, every input interacts with every weight, but our brains don’t fire all neurons in response to every stimulus, and unconditional sparsity that is not dependent on the input, such as from pruning of connections.

-

Symbolic representations. Symbolic AI programs are based on creating explicit structures and behaviour rules. This approach was the dominant paradigm in AI from the 1950s up to the mid 1990s. It is however considered a complementary technique to deep learning, possibly reflecting the fast and slow parts of the human cognitive system. Examples are decision trees and PAC learning.

-

Composition. New models will be created from parts such as whole sub models, or other reusable components. This is the way any complex system is constructed, including our brains.

Python performance

Broadly, the performance of Python programs can be improved at three levels:

- Optimising the application.

- Optimising the language implementation.

- Optimising the hardware.

The work in Quantitative Overhead Analysis for Python [1] provides a detailed analysis of overheads in CPython. 1 The different types of overhead are described in the following table, which is taken from the paper.

| Group | Overhead category | Description |

|---|---|---|

| Additional language features | ||

| Error check | Check for overflow, out-of-bounds and other errors | |

| Garbage collection | Automatically freeing unused memory | |

| Rich flow control | Support for more condition cases and control structures | |

| Dynamic language features | ||

| Type check | Checking variable type to determine operation | |

| Boxing/unboxing | Wrapping or unwrapping integer or float types | |

| Name resolution | Looking up variable in a map | |

| Function resolution | Dereferencing function pointers to perform an operation | |

| Function setup/cleanup | Setting up for a function call and cleaning up when finished | |

| Interpreter operations | ||

| Dispatch | Reading and decoding bytecode instruction | |

| Stack | Reading, writing, and managing VM stack | |

| Const load | Reading constants | |

| Object allocation | Inefficient deallocation followed by allocation of objects | |

| Reg transfer | Calculating address of VM storage | |

| C function call | Following the C calling convention in the interpreter |

The following charts (also taken from the paper) show the proportions are given as a percentage of total execution time, based on the measured execution of a set of benchmarks. On average, 64.9% of overall execution time is overhead, and the remaining 35.1% is used for the execution of the program. Of the language features, name resolution and function setup/cleanup dominate. Of the interpreter operations, dispatch (reading bytecode and executing the correct operations) and C function calls dominate.

There has been significant work on tackling (2) the language implementation. Prominent examples include: PyPy, an alternative optimised implementation written in Python that includes a just in-time (JIT) compiler to dynamically optimise common code paths; Cinder is Meta’s internal performance-optimised version of CPython that includes various performance optimisations and a per-method JIT; Cython translates Python to C/C++ for static compilation and execution in the CPython runtime environment; Nuitka is similar to Cython, as a compiler from Python to C, using the CPython interpreter as a library; And not forgetting CPython itself has an active project led by Microsoft and Guido van Rossum called Faster CPython to explore performance optimisations. Approximately, these approaches achieve speedups of up to an order of magnitude when compared to the standard CPython implementation.

Where the previous examples optimise Python as a general-purpose language, some approaches such as Codon, Numba and Triton compile subsets of Python into machine code for host or accelerator devices, eliminating the runtime overhead altogether. These approaches can achieve speedups of the order of 100 times for serial execution. They focus however on accelerating numerical computations and therefore sidestep the difficulties of statically-compiling dynamic features such as naming, large integers and data structures, which are much more challenging.

Within the scope of (1) optimising the application, a significant issue

preventing the use of parallelism is Python’s Global Interpreter Lock

(GIL). This lock allows only one thread to execute the interpreter at

once, which was done originally to make the counting of object references

simpler. It is possible to work around the constraints imposed by the GIL, such

as with the multiprocessing module but this makes it difficult to express

different types of parallelism and creates an ease-of-use problem. A

PEP submitted by a PyTorch developer makes the case for removing the

GIL, outlining motivating examples in scientific and numerical computing, and

tasks using Python for coordination and communication. A decision on adoption

of this PEP is yet to be made but a reference implementation is available.

There are various sources of overhead in the execution of a Python program that can contribute to orders-of-magnitude slower runtime compared to a compiled program. Strategies to reduce this overhead are in optimising the sources of overhead directly by improving compilation strategies, improving runtime strategies (such as caching of accesses or performing JIT compilation to machine code of frequent code paths), statically compiling Python code to C code including inline calls to the interpreter, and, for restricted subsets of Python, statically compiling Python code to machine code without the interpreter. These approaches are all within the software domain, so it is interesting to consider in what ways computer hardware could be optimised to further reduce the runtime overheads in Python programs.

Hardware impacts on Python performance

The main findings from the microarchitecture investigation in [1] summarised below provide a useful basis for understanding how Python is limited by current computer hardware. The study is based on a range of benchmarks run with CPython and PyPy with and without JIT.

-

ILP. Both CPython and PyPy exhibit low instruction-level parallelism. This suggests that choosing a deeply-pipelined out-of-order core may not provide good tradeoff between silicon area and performance. A simpler, in-order core may instead be a better choice, particularly when building a parallel processor with many cores.

-

Working memory. Across all the benchmarks, large caches do not provide a performance benefit, implying that working sets tend to be relatively small.

-

Nursery sizing. A critical factor related to working memory is an area used for the allocation for short-term objects called a nursery. When the nursery does not fit in cache, performance is impacted due to cache thrashing. However, there are two things to consider: reducing the nursery size will increase garbage-collection overheads and the optimal nursery size is dependent on the application.

-

JIT. When JIT compilation is used, the memory system is put under more pressure, so although the number of instructions executed reduces, the latency of memory operations increases due to cache line misses. This implies the working memory increases and so accordingly the overhead of garbage collection also increases.

It is clear from these findings that optimising the memory system will yield a more significant performance improvement than optimising the processor microarchitecture and instruction set design (as is typically the focus of new processor designs). Orthogonal to optimisations in the memory system, providing more execution parallelism at the process level is the only other way to significantly scale performance. This gives us the basis for a new Python processor.

Hardware support for Python

This section presents some rough ideas on what new computer hardware might look like that optimises the execution of Python (or indeed other dynamic languages).

System architecture

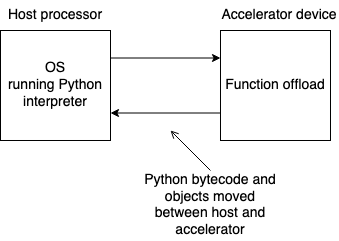

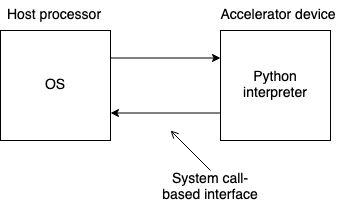

The most straightforward way to deploy a new processor chip is as an accelerator to a conventional host processor connected via PCIe. The host processor can then offload parts or all of the Python workload to the accelerator for improved performance. The following diagrams show two ways that this could work: one where the host runs the Python interpreter and offloads parts of the Python program (similar to the way Eager Mode works in PyTorch and TensorFlow); the other where the accelerator runs the Python interpreter and communicates to the host via a standard set of system calls. The first scenario means that execution can fall back onto the host if the Python code was not supported by the accelerator, thereby allowing the design of the accelerator to be simpler. However, managing the communication of Python objects over this boundary is complicated and potentially a significant overhead. In the second scenario, the whole Python program is executed by the interpreter running on the accelerator. This simplifies the interface between the two devices, which would only need to provide basic system calls to the interpreter.

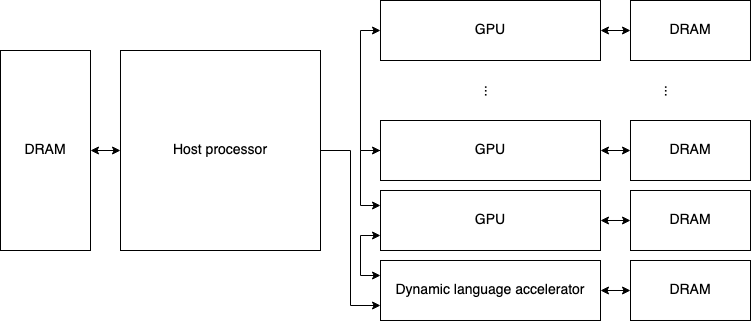

At a system level, an accelerator device for Python might be integrated between the host processor and other accelerators (typically GPUs in data-centre-type deployments) since Python will be responsible for coordinating offload of computations. Access to external memory can either be to DRAM via the host or on DRAM integrated with the device. The latter has the benefit of being able to supply lower latency and higher bandwidth to the memories.

Core architecture

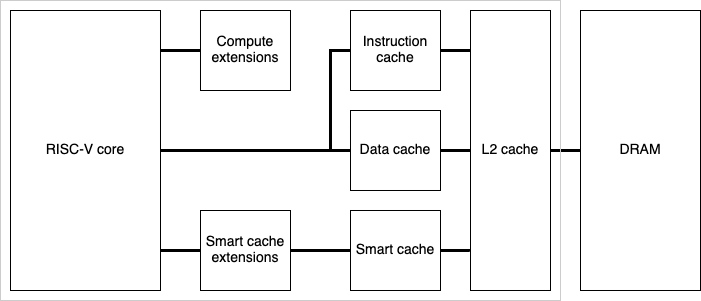

At the core level, it makes a lot of sense to use RISC-V as the base architecture because it is a general-purpose ISA that is open and easily extensible. As previously noted, the RISC-V microarchitecture can be kept simple because interpreting Python is not heavily dependent on ILP. The complexity of the microarchitecture depends on the best tradeoff between sequential and parallel performance, which in turn depends on the workload. AI for example will be weighted towards highly-parallel execution, so is better suited to a large number of simple processor cores. Extensions to the core can be added to provide optimised support for specific operations (eg floating-point arithmetic and for matrix multiplication), support for concurrency (threading, synchronisation, communication etc) or control over the memory hierarchy.

Memory system

One way to improve the way memory is managed is to bring some level of control

of the cache to the processor. In the following diagram, A RISC-V core has

extensions that allows it to control a ‘smart cache’. Such a system could

enable aggressive caching of computations that are frequently recomputed, such

as in the processes of name and function resolution, or from calling a function

with the same arguments, which account for a substantial portion of the

language overhead. A motivating example is caching of the C strlen function

that is used frequently by the Python interpreter in name resolution. A

difficulty in caching this function entirely in software is that a full string

comparison is required to determine whether to invalidate the cache or not, and

this is roughly as expensive as strlen. A hardware caching scheme could be

implemented more simply by snooping memory writes and invalidating the cache

entry if any part of the string has changed. To support this, hardware

extensions would be required to place items in the cache, retrieve items in the

cache, monitor ranges of memory and invalidate items in monitored ranges.

Another memory-system optimisation is to provide garbage collection (GC) as a hardware-managed function. GC is a technique that has been studied for decades, including in hardware: it was first introduced in Lisp in the 1950s and appeared as a hardware extension in the 1981 Intel iAPX 432 (see [4] for a literature review of hardware GC techniques and implementations). Surprisingly, hardware GC has never caught on and this is certainly related to the challenges of a performant solution requiring integration across many levels of abstraction: microarchitecture, architecture, tooling, operating systems and languages. However, it seems that this precedent should be challenged given that GC is central to the way dynamic languages work.

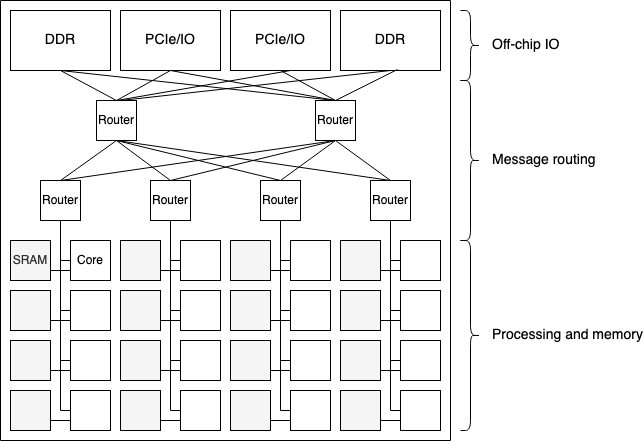

Scaling the number of cores

Building a system with multiple processing cores can be done in a conventional way by sharing access to higher levels of cache and adding a coherency mechanism for lower-level private caches, but this does not scale well past a few tens or hundreds of cores due to the overheads of maintaining coherency. A different and more radical approach is to use a distributed-memory architecture where each core has a private memory and communication between cores or to external DRAM is performed explicitly using messages. The major benefit of such an architecture is that is scalable to large numbers of cores, providing more performance from parallelism, and that processor-memory pairs can flexibly be used as units of memory or processing dependent on the application. This approach generalises the concept of a programmable cache hierarchy by using processor-memory pairs as units of memory with the processor providing access logic and building arbitrary caching schemes in software, dependent on the characteristics of the workload. I have previously written about this idea in my work on emulating large memories. Alternatively, processor-memory pairs can be turned over to processing and used to execute parts of an application in parallel, a great example of which would be running one or several JIT compiler processes concurrently with the application.

The following diagram sketches how such a machine might be built. The main building blocks are a processor-memory tile and a router. The tile includes communication facilities, allowing it to communicate to all other tiles in the system and to off-chip communication interfaces via routers. The arrangement of routers and tiles is flexible but the routers have enough links that high-dimensional topologies can be created to provide communication with bounded latency and throughput, which is essential for a general-purpose machine. The operation of the communications fabric could be dynamic allocated with packet switching, or statically allocated with circuit switching. Special packet types can be used for accessing external memory or other off-chip IO, or for implementing shared-memory operations such as direct access to remote tile memories. Collective operations such as scatters and gathers are likely to be frequently used, so could be optimised with supporting hardware in the tile and routers. These are the essential ingredients of a general-purpose fine-grained parallel machine. I have previously outlined a similar kind of machine in my PhD thesis, and I intend to post a note with a revised and fuller description at some point soon.

Summary

This note makes the argument that ease of use the critical factor in the development of new application areas and the adoption of new computer hardware. Ease of use has in many regards won as the most important factor in software and programming, but new computer hardware prioritises performance. Closing this gap would mean that rapidly-developing application areas such as AI would benefit by moving the boundary away from optimised low-level libraries, making fuller use of languages such as Python. For computer designers, there is a huge opportunity for new architectural innovations that support a radically different workload from conventional low-level compiled languages. Contrary to conventional wisdom, hardware is easier to innovate rapidly and it is software that bears the weight of legacy.

Acknowledgements

The hardware ideas in this note were developed in conversations with James Pallister. Closely related to some of the ideas explored is a new UK startup VyperCore co-founded by Ed Nutting who are building a RISC-V-based processor that includes facilities for hardware memory allocation and GC for performance and safety.

References

-

Mohamed Ismail and G. Edward Suh, Quantitative Overhead Analysis for Python, 2018 IEEE International Symposium on Workload Characterization (IISWC). [IEEE, PDF]

-

Nagy Mostafa, Chandra Krintz, Calin Cascaval, David Edelsohn, Priya Nagpurkar, Peng Wu, Understanding the Potential of Interpreter-based Optimizations for Python. UCSB Technical Report #2010-14 August, 2010. [PDF]

-

Gergö Barany, Python Interpreter Performance Deconstructed, Proceedings of the Workshop on Dynamic Languages and Applications, June 2014. [ACM, PDF]

-

Andres Amaya Garcia, Integrated hardware garbage collection for real-time embedded systems, PhD thesis, University of Bristol 2021. [University of Bristol, PDF]

Related links

- Why Python is Slow: Looking Under the Hood, Pythonic Perambulations blog (2014).

- Accelerating Python Today, Intel technical note, James Reinders.

-

See [2] and [3] for other similar analyses that [1] builds on. ↩

![Python overheads measured in various benchmarks, from [1].](/thumbs/measured-overheads_1000x1000.png)